In our latest Tech Marketing Survey Series report—SEO in the Real World—82% of tech marketers shared that they actively work to improve technical SEO on their websites. However, 1/4 of respondents were not familiar with specific concepts of technical SEO, like XML sitemaps, schema markup, and more.

So, what can we learn from this? Well, the vast majority of tech marketers understand the importance of technical SEO in ranking on Google. However, a good portion do not have the knowledge to optimize their websites around technical SEO.

This makes sense: there is a significant difference between writing keyword-rich, expert blog content on a weekly basis (on-page SEO)—which many tech marketers excel at—and the nuts and bolts of websites more familiar to developers. While it’s true that technical SEO is often associated with developers, tech marketers should not sell themselves short! A good number of technical SEO concepts are easy to grasp and implement on your website. This blog aims to cover the fundamentals of technical SEO and break down the common tasks for tech marketers and developers alike.

Information Architecture and URLs

Often, I find it helpful to incorporate figurative language when discussing SEO. Case-in-point: last year, I wrote a blog post on how organic search rankings can drop after the launch of a new website. In the blog, I introduced readers to this concept: a website is like a new house, and Google is like your next door neighbor getting acclimated to your new place. Now, my previous blog focused more on on-page SEO. Still, the idea of a new house serves our discussion of technical SEO well.

At the core of any house is its foundation, just like a website’s information architecture is at its center. Information architecture is an industry term that refers to the organization of information on a website. Most website projects begin with a sitemap (more on that later) that shows all of the proposed pages and their parent–child relationships. This yields the structure of a website’s navigation that users interact with. While nearly all software and tech company websites have a structure that is visible to the typical user, most fall short of carrying this structure over to URLs.

Here’s an Example…

Take a look at the example sitemap below. This company has a top-level page for its products and then two individual product pages. The URL slug for the top-level products page is /products/. Notice that the sitemap shows the nesting of the two individual product pages (Blitzco and Growilla) within the parent products page.

With this nesting, the URLs for this section should be: /products/blitzco/ and /products/growilla/. Often, tech marketers forget to add the proper parent-child relationships with URLs! While organizing the pages with proper nesting in a website’s navigation is important for a positive user experience, it’s critical to have a proper URL structure for technical SEO as it shows Google the organization of content on your website. Plus, proper URL structure enables you to use the Content Drilldown reports in Google Analytics to report on the effectiveness of content types, such as your entire blog or all your product pages.

Some Extra Tidbits

A few more pro tips. First, when changing the URLs (i.e., permalinks) of your website pages, always be sure to implement 301 redirects. This ensures that future users accessing an old link do not end up on a 404 page. Second, make sure you are consistent if you use trailing slashes with URLs. In other words, do not have some pages with a trailing slash and others without. This is especially important if you use HubSpot CMS as you have to manually add slashes to the slug field for pages, whereas with WordPress it is a global setting.

Third, stay on top of any orphaned pages. An orphaned page is any page on your website not linked anywhere. Often, orphaned pages result from shifting content around and not realizing older pages still exist. Lastly, ensure the proper implementation of canonical tags. These help Google understand the “original” version of a page to avoid getting flagged for duplicate content. If you use the Yoast SEO plugin on WordPress, it automatically adds canonical tags to pages.

Sitemaps and the Robots.txt File

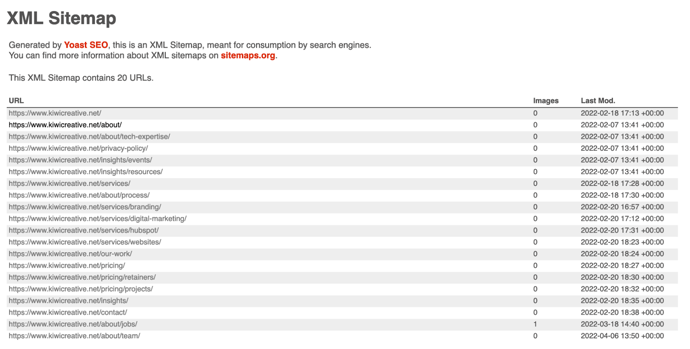

Before Google can crawl your website, it needs to understand where it is allowed to go and where it should stay out. This is where two files on your website—your sitemap and robots.txt file—come in handy. Let’s start with the sitemap. As the name implies, a sitemap is a “map” of all the content on your website. It is an XML structured data file that contains all of your indexable pages, images, and their last modified dates.

To check if your website has a sitemap, go to your homepage, and append “/sitemap.xml” to the URL. If you use a modern content management system, such as WordPress or HubSpot CMS, it probably created a sitemap for you automatically. If you use an SEO plugin, such as Yoast, you might get redirected to “/sitemap_index.xml,” which is basically a sitemap of sitemaps! Pro tip: you can also submit your sitemap to Google via Google Search Console to check the URLs against Google’s search index.

Next, the robots.txt file tells Google where it can and cannot crawl on your website. Robots.txt is a useful tool to block entire areas of your website, such as its backend. To check if your website has a robotx.txt file, append the URL with “/robots.txt” just as you did with the sitemap above. If you use WordPress as your CMS, you’ll likely notice that the file blocks access to the entire “/wp-admin” backend. It may also contain some additional disallow and allow statements. It’s important to not make any unnecessary changes to your robots.txt file as it can wreak havoc on your SEO. For example, the short “Disallow: /” statement blocks access to your entire website!

Crawling

As websites grow, URLs change, links break, and resources may move around. These produce crawl errors that tech marketers need to address to remain in Google’s good graces. While there is a plethora of crawl errors, I’ll cover some of the ones I run into most frequently on websites.

Redirects and 404 Errors

A 404 error occurs whenever a URL does not exist. I like to classify 404 errors into two categories: internal and external. An internal 404 error is a broken link on your website. With internal 404s, tech marketers can add 301 redirects to make sure users do not encounter the dreaded “404 – Page Not Found” screen when trying to travel to your website.

Now, I want to highlight two observations I’ve come across in the past with tech marketers’ websites that should be avoided. First, make sure to redirect users to a relevant page. Sometimes, tech marketers redirect broken links to the homepage for simplicity’s sake. This is poor practice. Second, some tech marketers utilize plugins to redirect any and all 404 errors to the homepage. In some cases, it is important to land on a 404 page. For example, let’s say someone types in “yourdomain.com/jgioagegagka.” This is likely not a page on your website, so the “404 – Page Not Found” screen appearing is perfectly fine.

For external 404s, you obviously do not the ability to add a 301 redirect on someone else’s site. As such, it’s a good idea to update the broken link on your website or remove it entirely. The same goes for any internal links you implement redirects for. While the 301 redirect remedies the 404 error, it adds an extra step in the user’s journey, slowing down the page load time. I recommend swapping out the broken internal links with the new destination URL.

Also, be sure that your 301 redirects have a 1:1 relationship. For particularly large websites, it is not uncommon for redirect chains to pop up. This is when there are multiple redirects in a row. Let’s say you have a redirect that goes from URL A → URL B → URL C. To eliminate this redirect chain, turn this single redirect into two, 1:1 redirects: URL A → URL C and URL B → URL C. Tools like httpstatus.io can help identify redirect chains when testing a set of URLs.

5xx Server Errors

When a webserver cannot fulfill a request, it pings an HTTP 5xx error. The most common 5xx errors are 500 (internal server error), 503 (service unavailable) and 504 (gateway timeout). Since server errors can directly block users (and Google) from accessing your website, it’s important to fix these. In many cases, 5xx errors result from misconfigurations with your webserver or having too little bandwidth and memory to support the volume of users accessing your website. I recommend talking with your webmaster to determine the relevant changes to make.

Mixed Content

Today, it is crucial for every website to have a valid SSL certificate. Most modern browsers, particularly Google Chrome, will block access to HTTP websites due to them not being secure. Most software and tech company websites have valid SSL certificates. But, if your site does not have an SSL certificate, platforms like Let’s Encrypt offer free certificates your webmaster can add to your site.

With HTTPS, it’s important to make sure all of the links on your website (internal and external) are HTTPS, too. When an HTTP link appears on an HTTPS page, this is called mixed content. Google’s Developer’s page gives an excellent overview of mixed content and the ramifications of having it on your site if you’re curious. Fortunately, most mixed content instances are easy to fix with global find-and-replace plugins!

Time to Call in a Developer

Sometimes, technical SEO updates require the help of a developer! Since Google’s release of the Page Experience Update last summer, having a fast and responsive website is critical to SEO success. Remember that Google employs mobile-first indexing, meaning that it bases your rankings on the mobile version of your website. Google has a tool available in Google Chrome called Lighthouse to test your website and pinpoint specific areas to boost the page experience. Common improvements include:

- Reducing the number of calls made in the <head>

- Minifying CSS and JavaScript

- Optimizing image file sizes and lazy-loading media on pages

- Leveraging efficient caching plugins

You can also use a developer to implement structured data on your website. Sometimes referred to as schema markup, structured data refers to special tags you can add to your website’s code to mark up certain aspects, like FAQs, events, reviews, products, and more. With structured data, you also gain the ability to appear in rich snippets on Google, which take up much more real estate on SERPs and increase the chance of users clicking through to your website.